Problem

Overwhelmingly Digital-Only Tools for AI Education

An overwhelming share of AI education tools are digital or virtual only. Users feel a lack of agency when typing prompts into a black box. We needed to investigate whether tangible making (through physical models) could serve as a more intuitive bridge to AI literacy than text-based prompting alone. We also studied AI keyframe animation as a performance of the physical model, and its potential to make that model feel 'alive'.

Pathways to Community-Centric AI?

While the pace of AI development outstrips much of traditional tech development, researchers, scholars and administrators are increasingly calling for community-led, community-driven and community-owned AI, in contrast to the concentration of AI work in the most powerful tech firms. We asked how to invite the community, within its grassroots ecosystem, into introductory, engaging encounters with AI that give it back a sense of power over this development.

Design

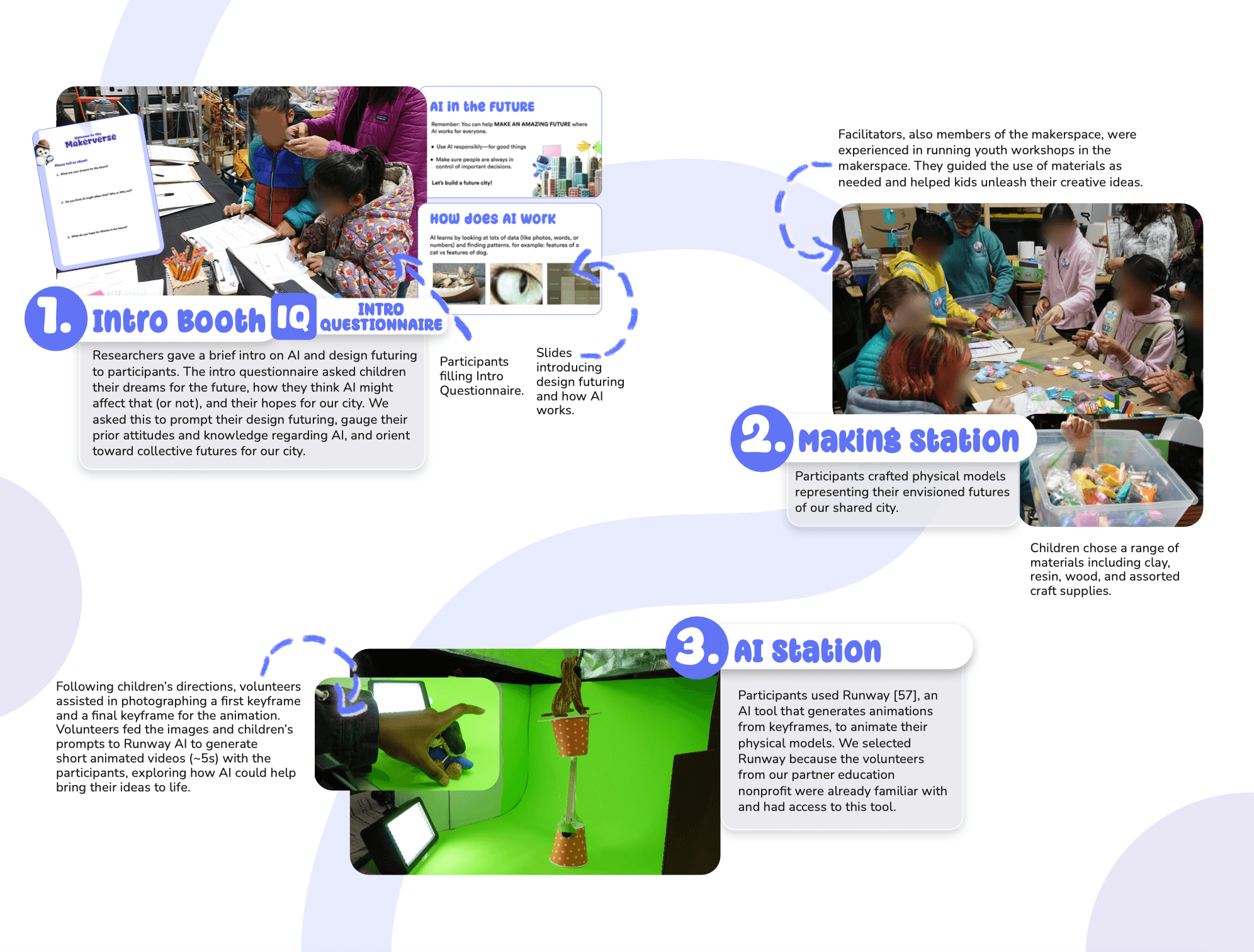

(The flow for the workshop booths. Source: Pictorial for ACM TEI 26)

The Process: "Thinking Through Making"

We rejected the traditional focus group format in favor of Participatory Making and Design Futuring. We designed a 5-step user journey that moved participants from abstract ideation to concrete prototyping, and finally to AI augmentation.

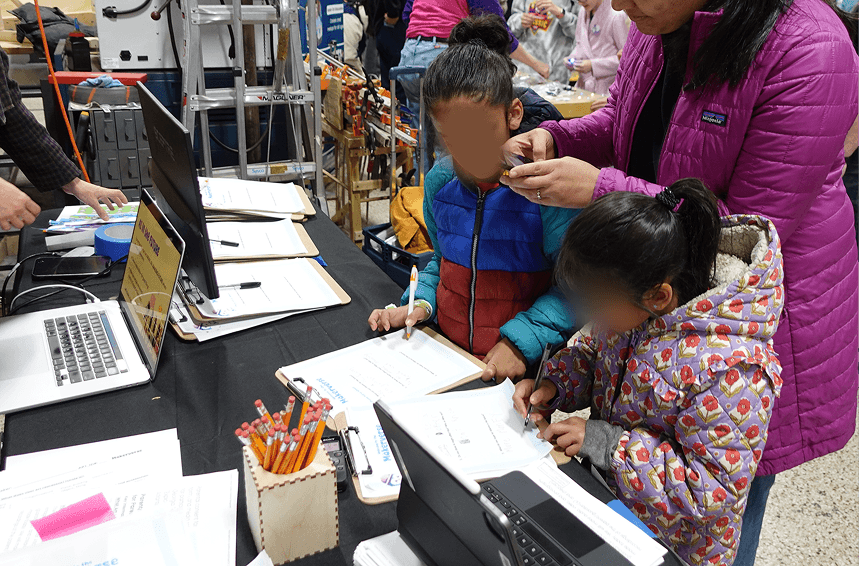

Station 1: The Intro

Intro Questionnaire

Goal: We asked participants about their current feelings toward AI and their "blue sky" hopes for the city.

Analysis: Pre-workshop baselines

Station 2: Making

Activity: Participants used low-fidelity materials (clay, wood, resin) to build a character or object for the "Future City of Atlanta".

Station 3: AI Station

Activity: Facilitators helped participants photograph their physical models. We then used RunwayML to "animate" these static objects based on the user's verbal prompts.

Observation: We observed how users negotiated with the AI. Did they accept the AI's interpretation? Did they get frustrated when the AI ignored the physical constraints of their clay model?

Station 4: Interviews

To capture qualitative feedback in a chaotic festival environment, we designed a "Red Carpet" interview station. This framed the interview as a VIP experience.

Data Collection: We recorded 3 hours of video interviews with 43 participants, focusing on their emotional reaction to the AI's output.

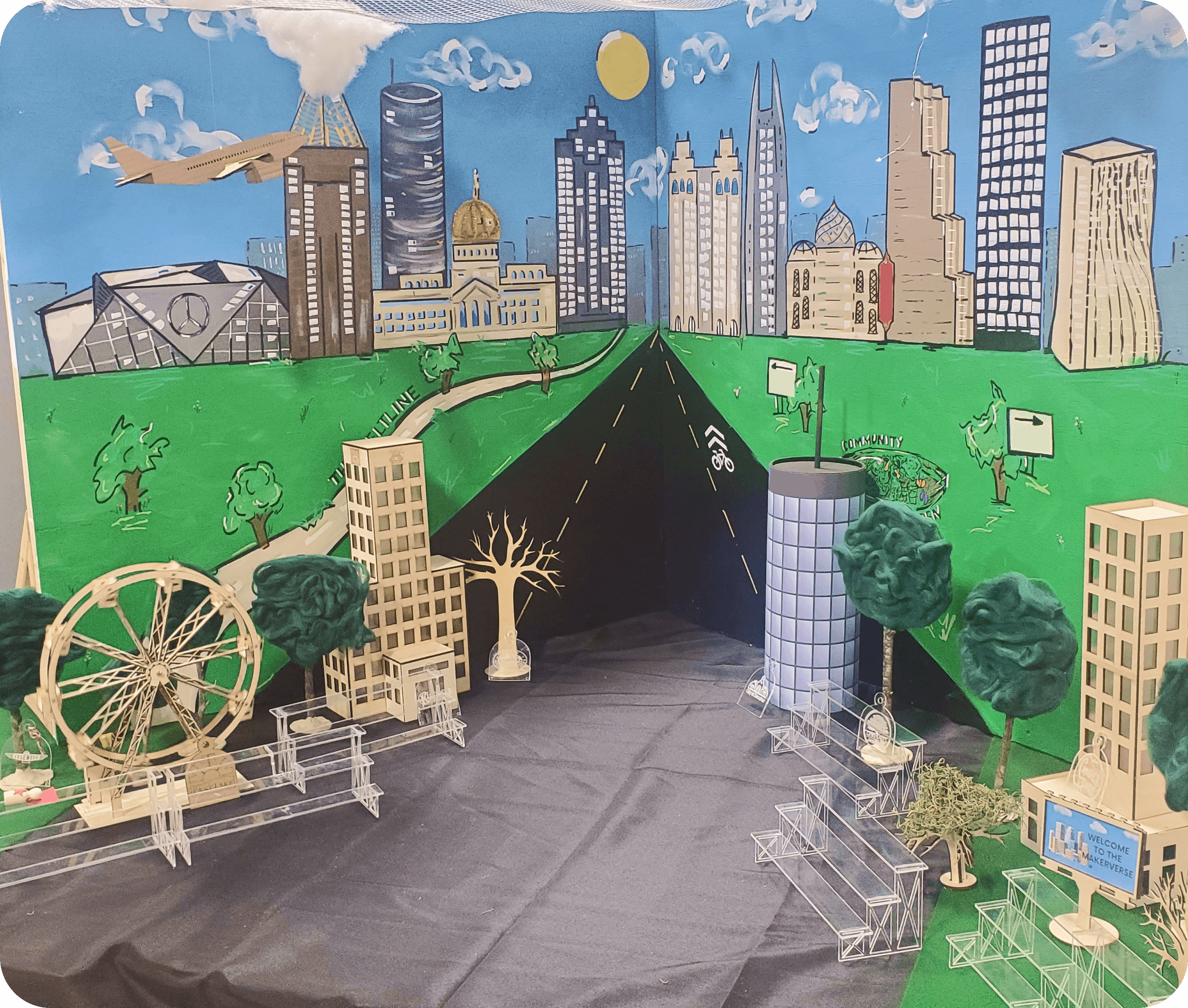

Station 5: The Diorama

Activity: Participants placed their physical artifacts onto a collective map of the city.

Method: This served as a real-time collective artifact. Users self-organized their creations into clusters (e.g., placing trees near housing, or robots near roads), revealing their mental models of urban zoning.

The initial physical diorama built by the makerspace, which became a collective artifact as people finished their handmade models.

Frames from the AI-generated Runway video for one of the clay models, showing a can land and grab an object.

Analysis & Findings

Left: Participants showing their models after their interview at the red carpet. Right: Participants filling out the intro questionnaire.

We utilized Reflexive Thematic Analysis to code the interview transcripts and survey data (N=118 questionnaires, 43 interviews). We looked beyond surface-level keywords to identify semantic patterns in how users described their agency.

Insight 1: Craft as Meaning Making

Users were far more forgiving of AI "hallucinations" (glitches) when the output was based on their own physical creation.

One participant noted, "AI changes the plan slightly but it's good" (EQ37). Another was delighted when the AI made their clay cat "stride" unexpectedly.

Insight 2: Beyond Technocentric Futures

Despite being in a high-tech "Maker" environment, the community overwhelmingly rejected "Cyberpunk" or technocentric futures.

60% of the artifacts created were nature-based (flowers, animals, trees). Participants explicitly stated desires for "more flowers and more parks" (P16) rather than flying cars or robots.

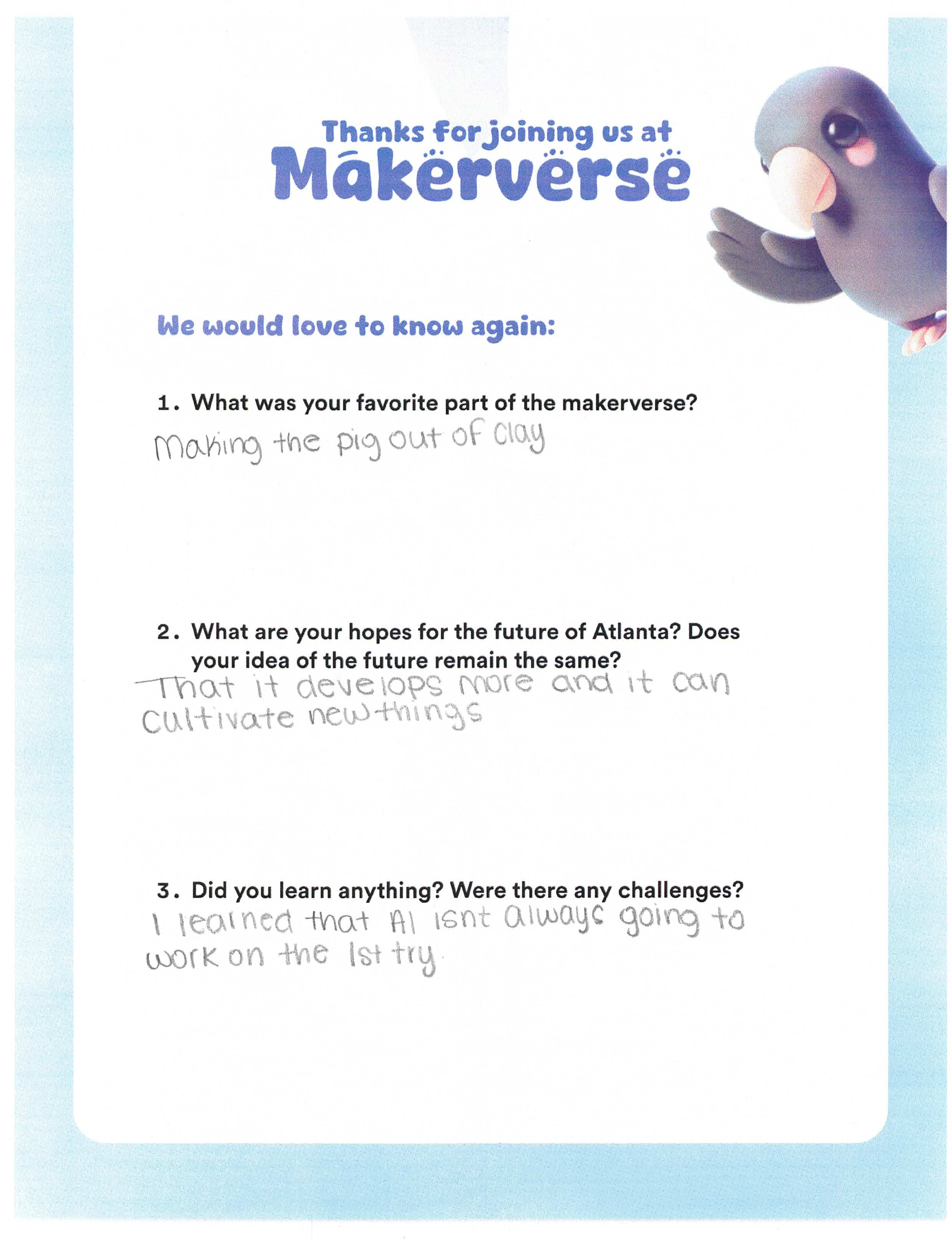

Insight 3: Imperfect AI

While users enjoyed the "surprise" of AI, they became frustrated when the AI overrode their core intent.

"I learned that AI isn't always going to work on the 1st try" (EQ43). Users viewed the AI as a "collaborator" that needed strict supervision.

A handwritten response from a participant in the exit questionnaire.

What We Learnt

The final diorama was made into a virtual version and emailed to participants.