PlayFutures

Workshops exploring Generative AI and Storytelling to imagine Public Places and Civic Visions

AI Literacy

Design Research

Qualitative Research

Participatory Design

Funding

Seed Grant from the Atlanta Interdisciplinary AI Network 2024, PI: Noura Howell

Role

Lead Researcher, Facilitator

Tools

ChatGPT (GPT-4), Craft Materials

Timeline

August 2023 - Feb 2024 (6 months)

Methods

Participatory Making, Design Futuring, Making, Thematic Analysis

Team

Supratim Pait, Noura Howell, Michael Nitsche, Ashley Frith, Kalia Morrison, Jennifer Morrison, Sumita Sharma

Overview

PlayFutures is a participatory design project aimed at fostering critical AI literacy among children. In an era where AI reshapes communities without the input of younger generations, this project sought to give children a voice. By combining Generative AI (ChatGPT) with tangible puppet-making and performance, we created a 4-hour workshop model where children (aged 9-12) reimagined local civic spaces. The result was a validated method for "Design Futuring" that helps children transition from passive technology consumers to active, critical creators.

Problem

The Challenge: Current AI literacy efforts often focus heavily on technical skills, neglecting "child-specific embodiment" and critical examination. Children encounter AI daily but lack the agency to understand or influence how it impacts their physical communities.

The Goal: To develop a workshop framework that uses Design Futuring—imagining and crafting future scenarios—to scaffold critical thinking about AI, while simultaneously engaging children in civic discussions.

Methodology

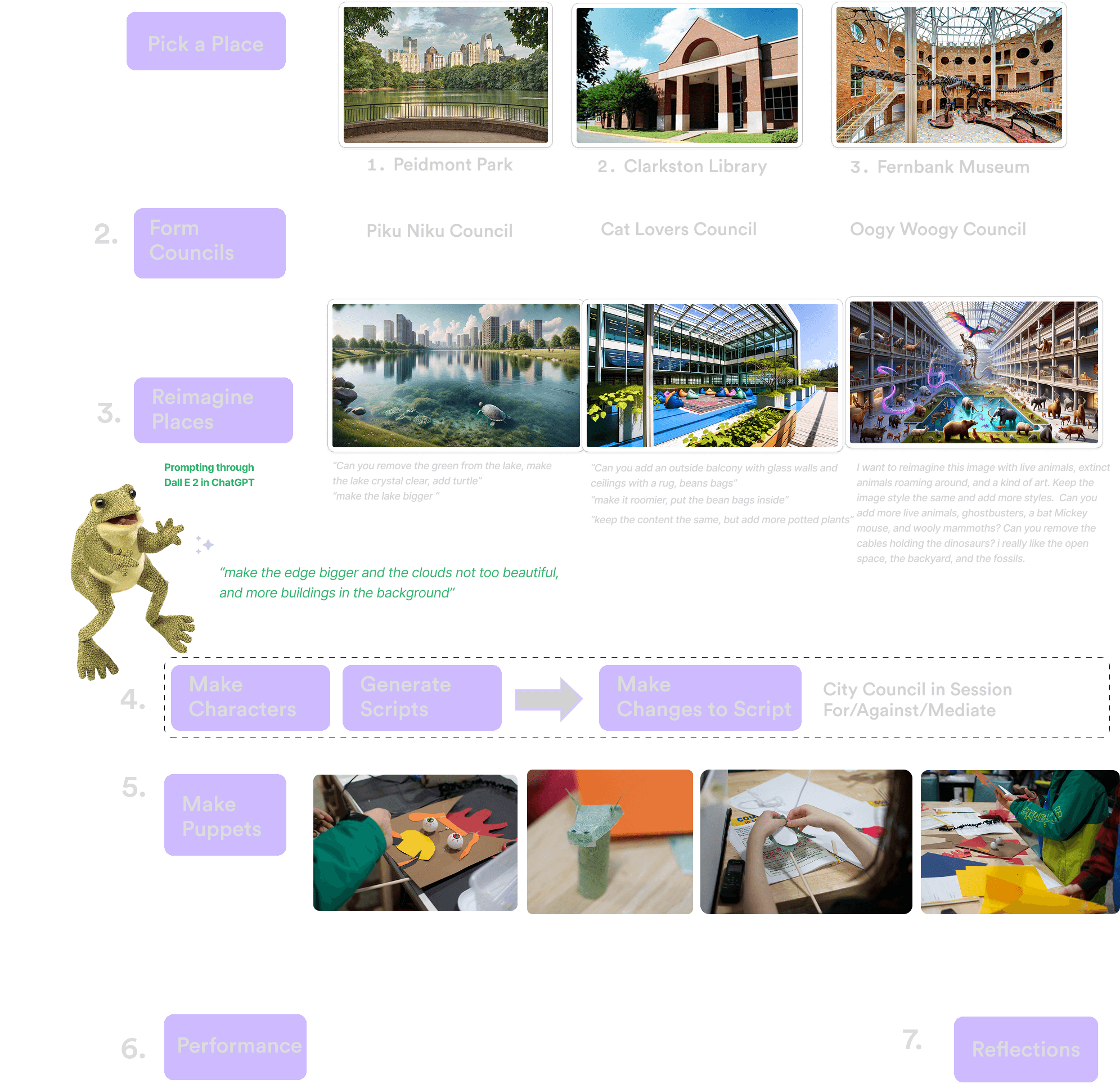

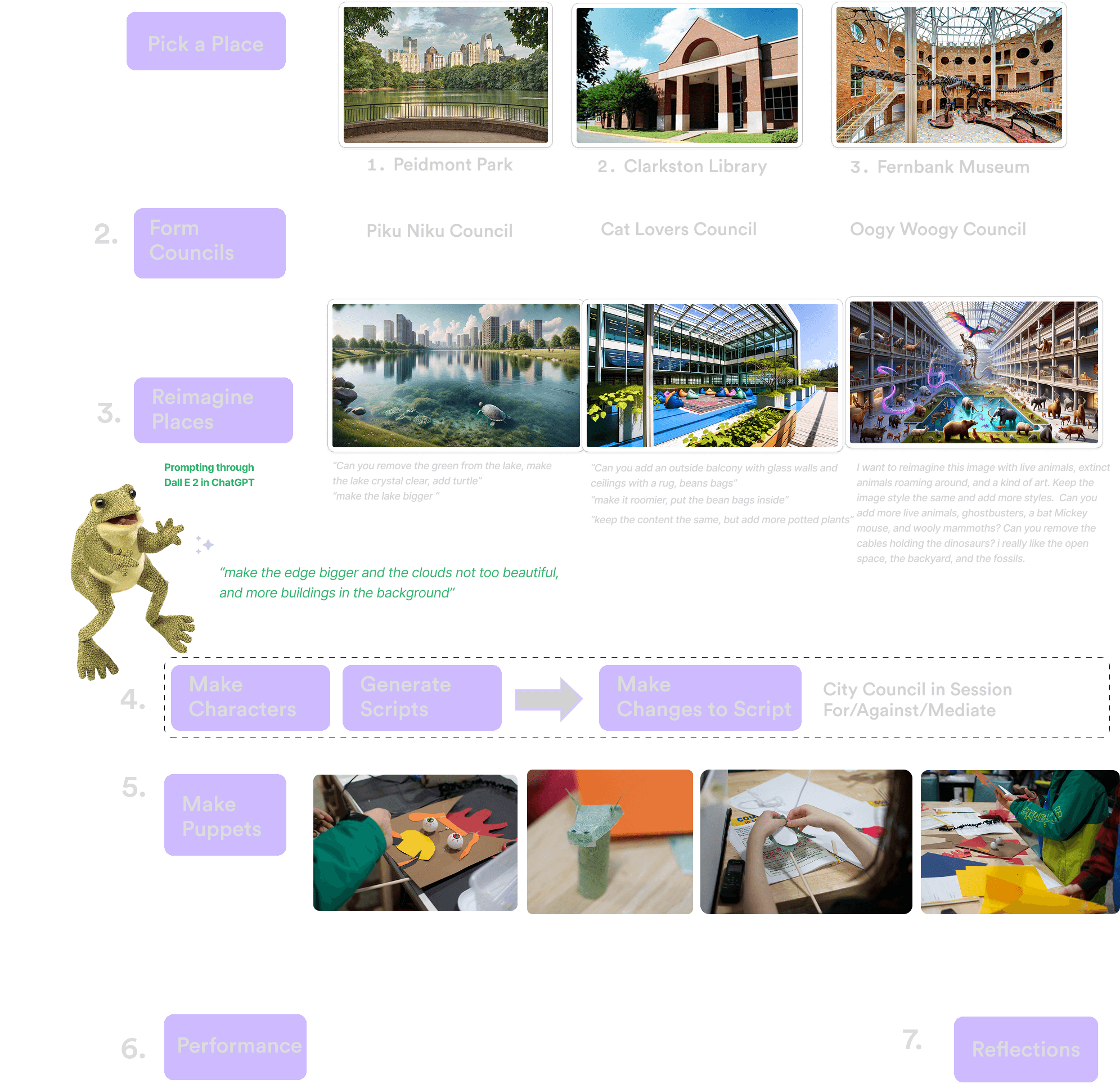

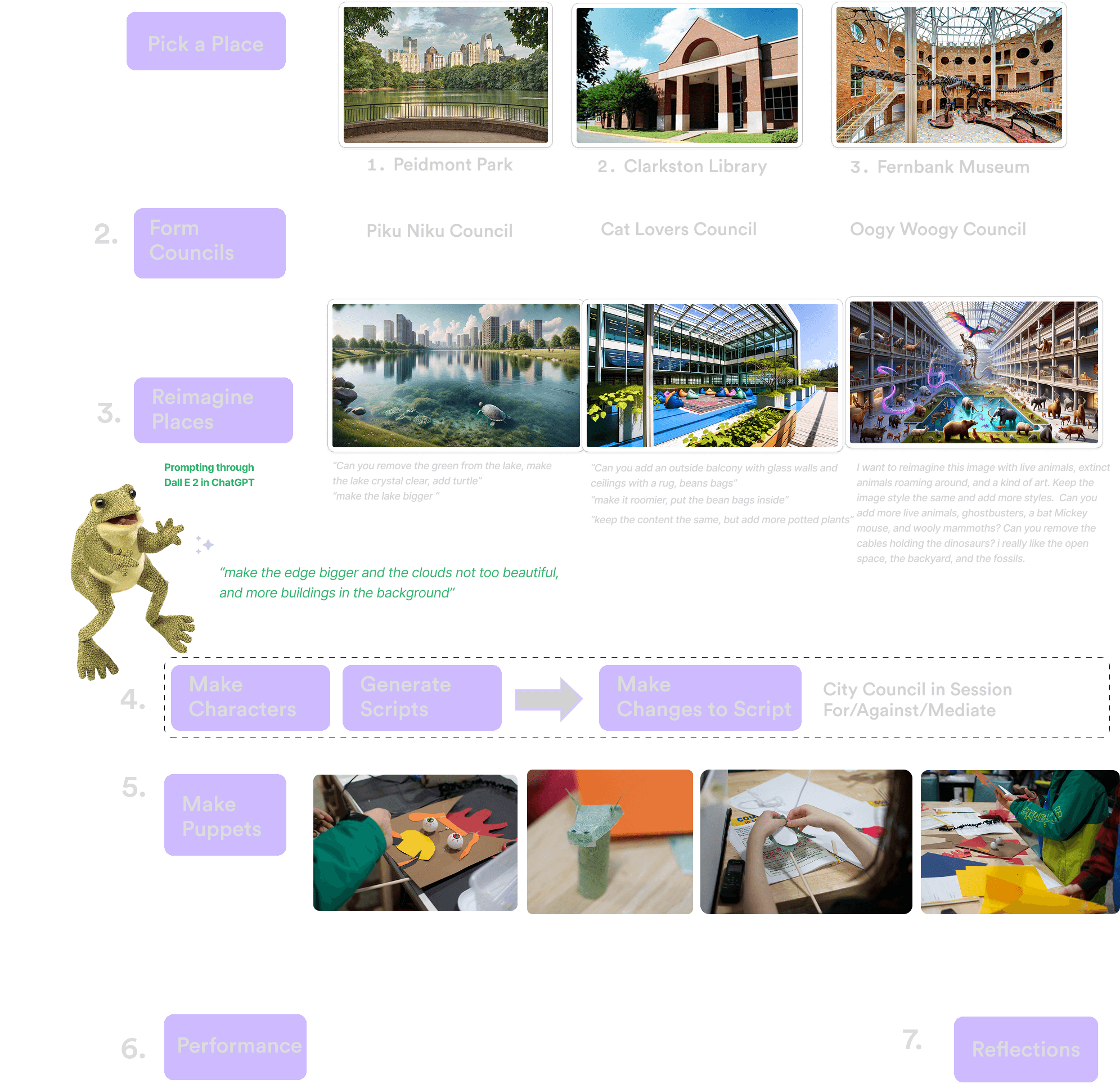

We designed a 4-hour workshop piloted in a community makerspace in Atlanta, GA.

Target Audience:

Users: Children aged 9–12.

Participants: n=7 (Mixed background: 5 traditional school, 2 home school; Mean age: 10.42).

The Hybrid Workflow: We utilized a "Hybrid" approach, blending high-tech Generative AI with low-tech tangible crafting to ground the abstract concepts of AI.

Phase 1: AI Visualization (45 min). Teams selected local spaces (Library, Park, Museum) and used ChatGPT to generate images of their "future vision" for these locations.

Phase 2: Script & Materiality (120 min). Participants used ChatGPT to generate debate scripts for city council members discussing these changes. They then hand-crafted physical puppets to represent these viewpoints.

Phase 3: Performance (45 min). Children performed the debates using their puppets, with the AI-generated images serving as digital backdrops.

A flow diagram of how the workshop unfolded

Left to right: Snippets from the final puppet performances of the Piku Niku Council at the park, the Cat Lovers Council at the library, and the Oogy Woogy Council at the museum.

Observations

4.1 Ideation (Civic Futures)

Children proposed optimistic, highly specific changes to their environment:

Group 1 (Library): More natural light, comfortable outdoor seating.

Group 2 (Museum): "More life," specifically requesting a "rainbow wooly mammoth."

Group 3 (Park): A cleaner, larger lake with teeming wildlife (environmental focus).

4.2 Prototyping (The AI "Friction")

The users treated AI not as an oracle, but as a "faulty process tool."

Observation: Group 2 attempted to fuse images to create a specific creature. The AI failed to produce the "rainbow wooly mammoth" on the second attempt.

User Feedback: Participants described the tool as "surprising, confusing, and mean," highlighting the gap between their imagination and the AI's capability.

4.3 Iteration (Reclaiming Agency)

The most significant UX finding occurred during the script generation phase.

Behavior: Children rejected the AI-generated scripts for lacking "soul."

Action: One team improvised their entire performance because the generated text felt "really AI-y." Another team cut two full lines of dialogue because "It wasn't their personality. It sounded unnatural."

Findings & Insights

Through reflexive thematic analysis of 4 hours of video/audio and 7 activity booklets, three core themes emerged:

1. AI is an "Imperfect Tool" for Design

Children quickly demystified the technology. Instead of viewing AI outputs as final solutions, they treated them as rough drafts. The "glitches" in the AI (e.g., failing to generate specific animal features) became teaching moments about the limitations of technology.

2. Preference for Authenticity

There was a strong user preference for authentic voice over automated efficiency.

Data Point: Only one team agreed with the AI-generated ending. The other two teams completely rewrote the conclusions to better fit their narrative goals.

Insight: Children are capable of distinguishing between "AI-like" content and human creativity, and they actively curate against the former.

3. Tangible Anchors Scaffolding Criticality

The physical act of making puppets provided a necessary counterbalance to the screen-based AI work. It allowed the children to step back from the interface and critique the digital output through the physical performance.

Reflections

PlayFutures demonstrated that engaging children in AI literacy requires more than just coding tutorials. By integrating Participatory Design and Performance, we successfully created an environment where children could critique AI.

The study suggests that when users (even young ones) are given the license to edit and override AI, they prioritize their own agency and creative vision, treating AI as a supportive but fallible tool rather than an authority.
